Evaluation provides a systematic method to study a program, practice, intervention, or initiative to understand how well it achieves its goals. Evaluations help determine what works well, what could be improved, and whether an approach can be replicated.

There is no one-size-fits-all approach to evaluating programs and interventions. The AmeriCorps evaluation readiness resources are available to support organizations as they build their capacity for learning and knowledge development.

Featured Resources

- Use the SCALER tool to assess whether an intervention is ready to successfully scale and extend its impact.

- Use the Organizational Capacity Assessment Tool (OCAT) to assess your organization's strengths, clarify perceptions, and plan strategies to enhance capacity in identified areas.

Basic Steps in Conducting an Evaluation: This course describes the basic steps for conducting an evaluation, including planning, collecting and analyzing data, and communicating and applying findings.

- Evaluation Basic Steps Slides (PDF)

Jump to section

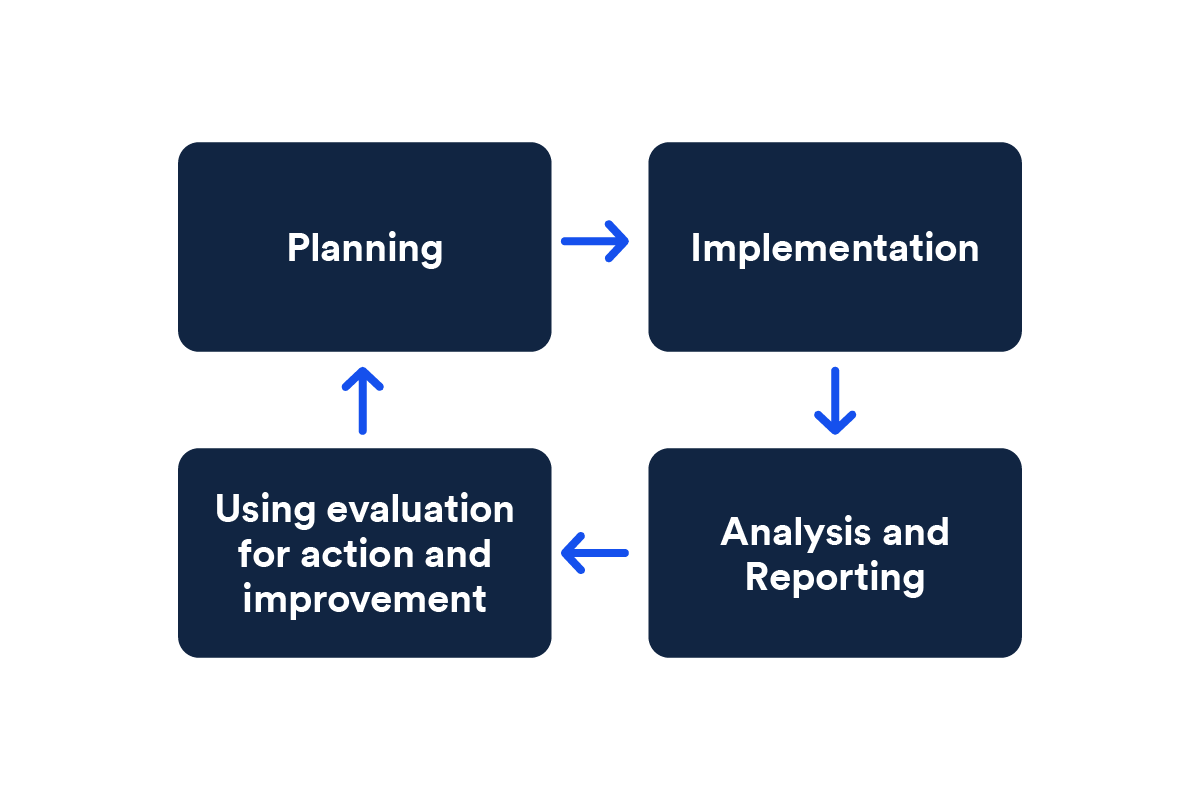

Planning → Implementation → Analysis and Reporting → Using evaluation

Laying the Groundwork Before Your First Evaluation

Laying the Groundwork Before Your First Evaluation: This presentation covers five foundational activities that programs should undertake during their first grant cycle so that they are ready to do an evaluation when they receive recompete funding for a second grant cycle.

Logic Models

Logic Models: This course introduces the key components of a logic model and discusses how logic models can be used to support daily program operations and evaluation planning.

- Logic Model Slides (PDF)

Developing Research Questions

Developing the Right Research Questions: This presentation describes the importance of research questions in overall program evaluation design, identifies the four basic steps for developing research questions, and demonstrates how to write strong research questions for different types of evaluation designs (i.e., process evaluation and outcome evaluation).

Designing an Evaluation

Overview of Evaluation Design: This course explains the different types of evaluation designs, the differences between them, and the key elements of each, as well as considerations for selecting a design for your AmeriCorps program evaluation.

- Evaluation Design Slides (PDF)

- Handout #1 (DOC) (PDF)

- Handout #2 (DOC) (PDF)

- Handout #3 (DOC) (PDF)

Additional Resources:

- SIF Evaluation Plan (SEP) Guidance (PDF)

Writing an Evaluation Plan

How to Write an Evaluation Plan: This course explains the purpose of an evaluation plan and outlines the key sections of the plan and what should be included in each section.

- Writing an Evaluation Plan Slides (PDF)

- Example Evaluation Plan Component Write Ups (PDF)

- Sample Evaluation Plan Checklist (PDF)

- Example Impact Evaluation Plan (PDF)

- Example Process Evaluation Plan, Comprehensive (PDF)

- Example Process Evaluation Plan, Standard (PDF)

- Example Non-Experimental Evaluation Plan (PDF)

Additional Resources:

- SIF Evaluation Plan (SEP) Guidance (PDF)

Budgeting for an Evaluation

Budgeting for Evaluation: This document discusses the key components of an evaluation budget and strategies for creating one. (PDF)

Recruiting and Managing an Evaluator

Managing an External Evaluator: This presentation describes how to manage an external evaluator and is intended for grantees that are considering or will be undertaking an external evaluation of their program.

Additional Resources:

- Assessing and Building Local Evaluation Networks Webinar (PPT) (PDF) (The content of this webinar is contained in the PowerPoint slides. There is no accompanying audio recording.)

- Administrative Data (web page)

- Examples of Effective SEPs (web page)

- How program managers can use low-cost experiments to improve results: A video overview (web page)

- Working with Institutional Review Boards (PDF)

Data Collection

Data Collection: This course will address key questions to consider prior to selecting a data collection method; the importance of selecting appropriate methods; the advantages and disadvantages of a variety of methods; and the difference between quantitative and qualitative methods and their roles in process and outcome evaluations.

- Data Collection Slides (PDF)

Family Educational Rights and Privacy Act (FERPA): These notes summarize a presentation made to School Turnaround AmeriCorps grantees about obtaining and sharing data with schools while adhering to FERPA privacy requirements. The handout, from the Privacy Technical Assistance Center (PTAC) at the Department of Education, provides more detailed information on navigating FERPA.

Additional Resources:

- SIF Secondary/Administrative Data Use: Accessing Restricted-Use Data (PDF)

Reporting

Reporting and Using Evaluation Results: This course will help AmeriCorps State and National programs understand the importance of communicating and disseminating evaluation results to stakeholders; write an evaluation report and become familiar with other key reporting tools; and determine meaningful programmatic changes based on evaluation findings and learn how to implement them.

- Reporting and Using Evaluation Results Slides (PDF) (PPT)

- Pre-work Handout (PDF)

- Dissemination Plan Example (PDF) (XLS)

- Recording of the Reporting and Using Evaluation Results presentation held on June 18, 2015 (Description of audio)

Using Results for Program Improvement

Reporting and Using Evaluation Results: In addition to covering reporting, this course helps AmeriCorps State and National programs determine meaningful programmatic changes based on evaluation findings and learn how to implement them.

Creating a Long-Term Research Agenda

Developing a Long-Term Research Agenda: This course will help participants recognize the importance of building a long-term research agenda; identify the various stages in building evidence of a program’s effectiveness; and understand the key questions to consider prior to developing a long-term research agenda.

- Developing a Long-Term Research Agenda Slides (PDF)

Evaluation Examples

Scaling Evidence-Based Models (SEBM) Project

The Office of Research and Evaluation initiated the Scaling Evidence-Based Models project to support the scaling of effective interventions. The project includes guides, research reports, case studies, and tools that contribute to the study and application of scaling effective interventions.

Guides:

- Scaling an Intervention: Recommendations and Resources: The guide provides five key recommendations that will help funders like AmeriCorps, other government agencies, and philanthropic organizations identify which funded interventions are effective, enhance their knowledge base on scaling them, and pursue scaling.

- How to Fully Describe an Intervention: This guide is intended to help practitioners thoroughly describe their intervention and communicate it to potential funders or stakeholders.

- Build Organizational Capacity to Implement an Intervention: This guide will help practitioners prepare to implement their desired intervention through building organizational capacity, which involves establishing the organizational structure, workforce, resources, processes, and culture to enable success.

- How to Structure Implementation Supports: This guide will help practitioners develop formal strategies (also known as implementation supports) to help consistently deliver an intervention as it was designed, which is especially helpful for organizations scaling an intervention and assessing implementation fidelity.

- Making the Most of Data: This guide will help practitioners maximize the use of their intervention data to help their organizations improve program implementation and provide evidence to funders about effectiveness.

- What Makes for a Well-Designed, Well-Implemented Impact Study: This guide is intended to help practitioners ensure that their evaluators produce high-quality impact studies.

- Baseline Equivalence: What it is and Why it is Needed: This guide is designed to help practitioners and researchers work together to design an impact study with baseline equivalence and, in turn, learn how to determine whether an impact study is likely to produce meaningful results.

- Scaling Programs with Research Evidence and Effectiveness (SPREE): This article focuses on how foundations can apply the SPREE process and provides insights into conditions that can help identify and support effective interventions that are ready to be scaled.

- Scaling Evidence-Based Models: Document Review Rubrics: This guide presents a two-part rubric for systematically reviewing documents to help practitioners identify the critical components of intervention effectiveness and describe plans for scaling the effective intervention.

Research Reports:

- Planned Scaling Activities of AmeriCorps-Funded Organizations: This report presents scaling readiness findings for a cohort of 25 organizations that provided plans to scale interventions with evidence of effectiveness, and it examines variations in demonstrated scaling readiness across AmeriCorps funding years.

- Evidence of Effectiveness in AmeriCorps-Funded Organizations: This report is designed to inform the agency's efforts to identify the intervention components that are critical for an intervention's effectiveness.

Case Studies:

- Scaling the Birth and Beyond (B&B) Intervention: Insights from the Experiences of the Child Abuse Prevention Council (CAPC): This case study describes the scaling of Birth and Beyond (B&B), a parenting education and support intervention designed to reduce child maltreatment, by the Child Abuse Prevention Council of Sacramento (CAPC) and its partners.

- Scaling the Reading Corps Intervention: Insights from the Experiences of the United Way of Iowa: This case study describes the scaling of Reading Corps, a literacy intervention designed to improve reading proficiency, by United Ways of Iowa (UWI) and its partners.

- Scaling the Home Instruction for Parents of Preschool Youngsters (HIPPY) Intervention: Insights from the Experiences of Parent Possible: This case study describes the scaling of Home Instruction for Parents of Preschool Youngsters (HIPPY), a home-visiting intervention that seeks to help parents improve their young children’s development, by Parent Possible and its partners.

- Scaling Evidence-Based Interventions: Insights from the Experiences of Three Grantees: This report presents insights from a cross-site analysis of process case studies conducted with three AmeriCorps grantees engaged in scaling.

Tools:

- Scaling Checklists: Assessing Your Level of Evidence and Readiness (SCALER): This report describes a framework that identifies how organizations can improve both their readiness to scale an intervention and the intervention’s readiness to be scaled, so that intervention services are best positioned to improve outcomes for a larger number of participants. Each checklist in the SCALER provides summary scores to reflect how ready an intervention and organization might be for scaling.

Evaluation is a powerful tool for improving a program and increasing its ability to serve people more efficiently and effectively. It gives programs an opportunity to test their interventions, adjust services to best meet community needs, and collect data to support their work.
